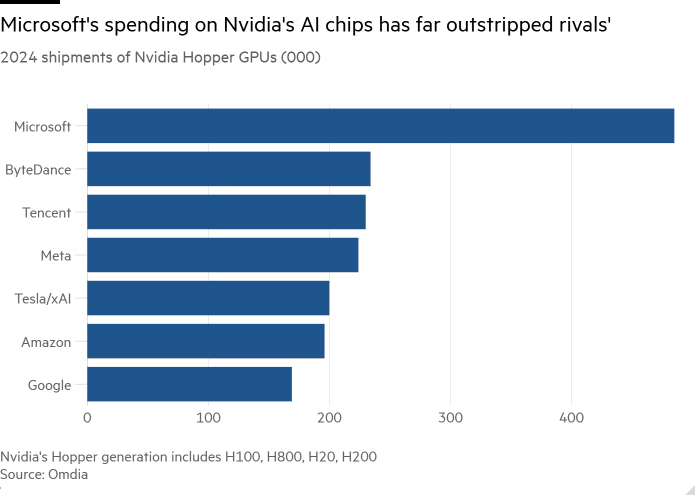

Microsoft has emerged as the largest buyer of NVIDIA's Hopper GPUs this year, purchasing nearly 500,000 units, according to reports from Economic Daily News and the Financial Times. This procurement volume more than doubles that of the second-largest buyer, Meta, which acquired 224,000 units. Microsoft’s aggressive investment in AI infrastructure underscores its ambitions to stay ahead in the rapidly growing AI sector, where demand for high-performance chips continues to surge.

The surge in AI development, spurred by the success of generative AI tools like ChatGPT, has created intense competition for NVIDIA's cutting-edge GPUs. Microsoft’s significant chip stockpile puts it in a strong position to accelerate the development of next-generation AI systems. Other major buyers of NVIDIA's Hopper GPUs include Amazon, Google, ByteDance, and Tencent, along with Microsoft-backed OpenAI.

Despite securing an ample supply of chips, Microsoft faces challenges beyond procurement. According to a separate report by MarketWatch, Microsoft CEO Satya Nadella stated that power consumption, not chip availability, remains the primary bottleneck for the company's AI efforts. While Microsoft has significantly scaled up its chip orders in response to the AI boom, Nadella emphasized that this was a one-time effort, and the company has now met its chip needs.

Separately, recent rumors suggest Microsoft may be scaling back its orders from NVIDIA. According to Commercial Times, production delays affecting NVIDIA’s next-generation Blackwell architecture chips, specifically the GB200 model, have led Microsoft to reconsider its procurement plans.

According to market research firm Omdia, the global AI chip market remains dominated by NVIDIA, although competitors are gaining ground. Meta, Amazon, and Google are increasingly investing in custom AI chips to reduce their reliance on NVIDIA. For instance, Amazon is ramping up its deployment of its own AI chips, Trainium and Inferentia, while Microsoft has also accelerated its adoption of in-house accelerators like Maia.

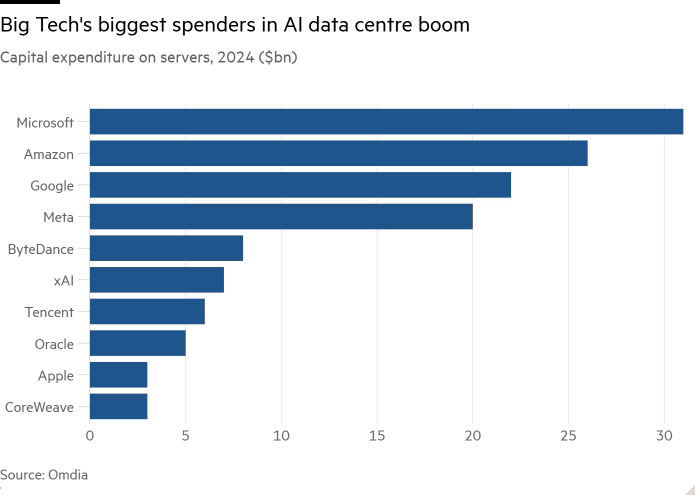

In 2024, total global spending on server infrastructure is expected to reach $229 billion, with Microsoft leading at $31 billion, followed by Amazon at $26 billion. Microsoft’s Azure cloud platform, which is integral to its AI strategy, is being used to train OpenAI's latest GPT-4 models, further cementing the company's role as a key player in the AI ecosystem.

While NVIDIA remains the dominant force in the AI chip market, the growing investments in custom chips from tech giants like Microsoft, Amazon, and Meta suggest that the competitive landscape is evolving rapidly. As companies like Microsoft continue to scale up their AI operations, they will need to balance chip procurement with infrastructure investments to maintain their leadership in the AI race.
