
Cerebras Systems announced Cerebras Inference, which it bills as the world’s fastest AI inference solution, on Tuesday (August 27), claiming it is 20 times faster than Nvidia's offerings. Nvidia's GPUs are the industry standard and are often accessed through cloud providers to run large language models such as those behind ChatGPT, but they can be expensive and difficult for smaller firms to access. Cerebras claims its new chips surpass GPU performance, offering faster and more affordable AI inference.

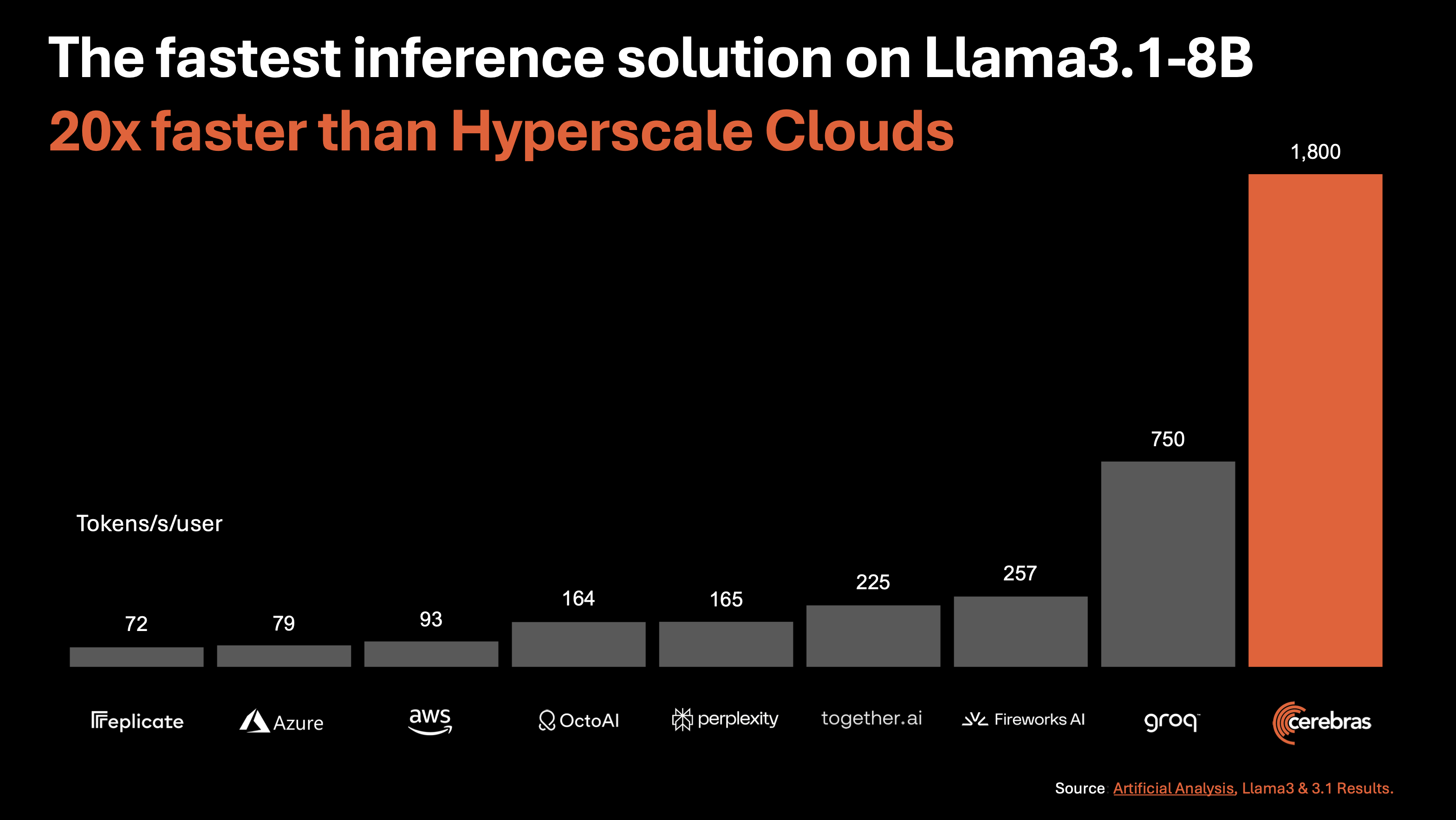

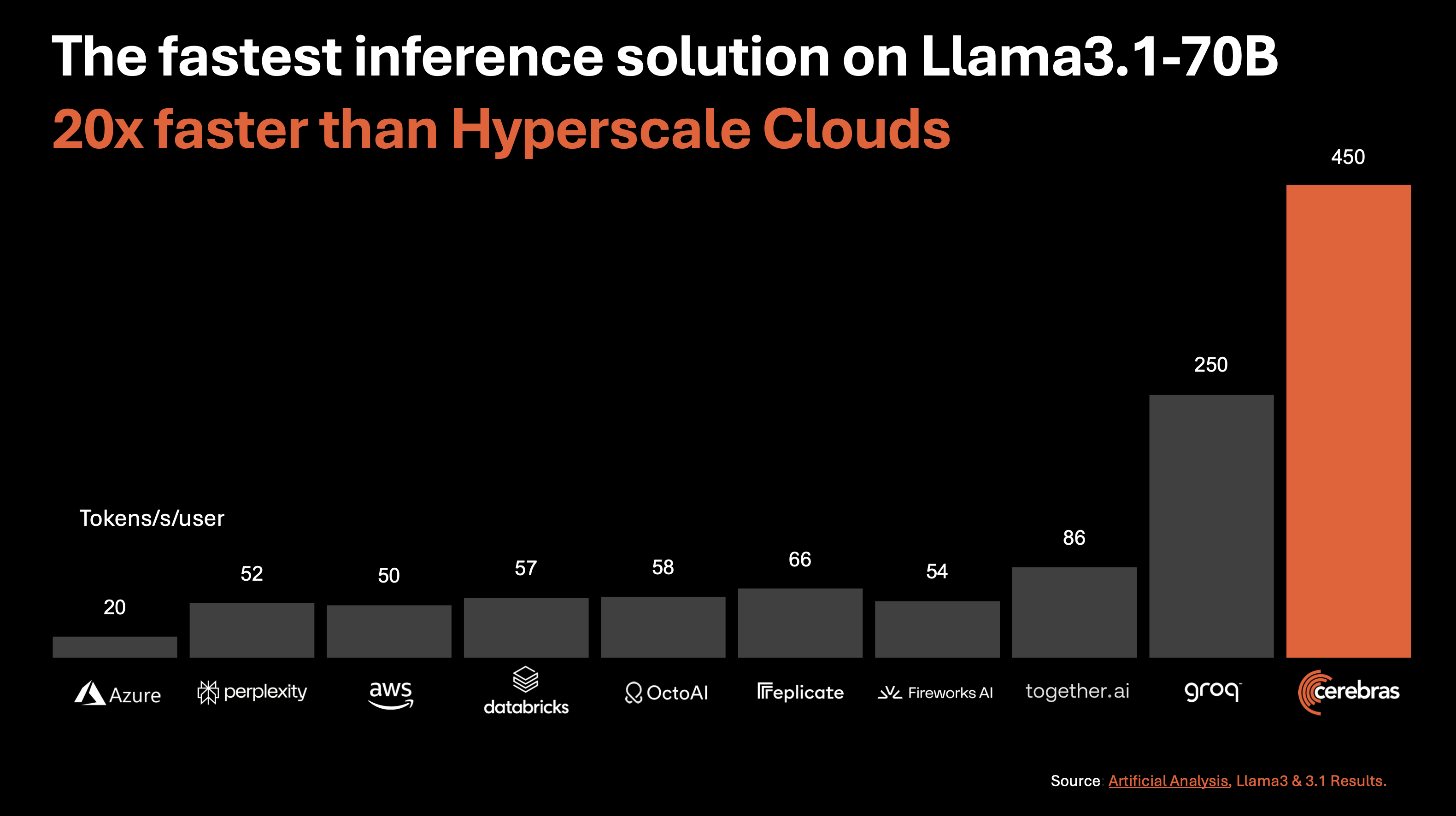

Powered by the third-generation Wafer Scale Engine, Cerebras Inference runs Llama 3.1 20x faster than GPU solutions at 1/5 the price, according to the company. At 1,800 tokens/s, Cerebras Inference is 2.4x faster than Groq on Llama 3.1 8B. For Llama 3.1 70B, Cerebras says it is the only platform to enable instant responses, at 450 tokens/s. All of this is achieved using native 16-bit weights for the model, which the company says ensures the highest-accuracy responses.
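To put the quoted throughput figures in perspective, the short sketch below converts tokens per second into the time needed to generate a response. It uses only the numbers cited above; the 900-token response length and the function name are hypothetical choices for illustration, and the calculation assumes a steady decode rate while ignoring prompt processing and network latency.

```python
def generation_time_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Time to emit num_tokens at a steady decode rate.

    Simplifying assumption: ignores prompt processing and network latency.
    """
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


# Throughput figures quoted in the article (company claims).
CEREBRAS_LLAMA_8B_TPS = 1800.0
CEREBRAS_LLAMA_70B_TPS = 450.0
SPEEDUP_OVER_GROQ_8B = 2.4

# Rate implied for Groq on the 8B model by the "2.4x faster" claim.
implied_groq_8b_tps = CEREBRAS_LLAMA_8B_TPS / SPEEDUP_OVER_GROQ_8B

# Hypothetical response length, chosen only for illustration.
RESPONSE_TOKENS = 900

rates = [
    ("Cerebras, Llama 3.1 8B", CEREBRAS_LLAMA_8B_TPS),
    ("Cerebras, Llama 3.1 70B", CEREBRAS_LLAMA_70B_TPS),
    ("Groq (implied), Llama 3.1 8B", implied_groq_8b_tps),
]

for label, tps in rates:
    seconds = generation_time_seconds(RESPONSE_TOKENS, tps)
    print(f"{label}: {tps:.0f} tokens/s, {seconds:.2f} s for {RESPONSE_TOKENS} tokens")
```

Under these assumptions, the 2.4x claim implies a rate of roughly 750 tokens/s for Groq on the 8B model, and a 900-token answer would take about half a second at 1,800 tokens/s versus two seconds at 450 tokens/s.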

Cerebras noted that inference is the fastest-growing segment of the AI industry, accounting for 40% of all AI-related workloads in cloud computing. According to CEO Andrew Feldman, the company’s outsized chips deliver more performance than GPUs, which he asserts cannot achieve this level of efficiency.

Nvidia has long dominated the AI computing market with its GPUs and CUDA programming environment, which have locked developers into its ecosystem. However, Cerebras' new Wafer Scale Engine chips, which the company says offer 7,000 times more memory bandwidth than Nvidia's H100 GPUs, may disrupt this dominance. These chips, part of the CS-3 data center system, are designed to solve critical memory bandwidth issues.

Cerebras is also preparing to go public, having recently filed a confidential prospectus with the SEC.

About Cerebras Systems

Cerebras Systems is a team of pioneering computer architects, computer scientists, deep learning researchers, and engineers of all types. We have come together to accelerate generative AI by building from the ground up a new class of AI supercomputer. Our flagship product, the CS-3 system, is powered by the world’s largest and fastest AI processor, our Wafer-Scale Engine-3. CS-3s are quickly and easily clustered together to make the largest AI supercomputers in the world, and make placing models on the supercomputers dead simple by avoiding the complexity of distributed computing. Leading corporations, research institutions, and governments use Cerebras solutions for the development of pathbreaking proprietary models, and to train open-source models with millions of downloads. Cerebras solutions are available through the Cerebras Cloud and on premises. For further information, visit www.cerebras.ai or follow us on LinkedIn or X.

Editor: Lulu
