Chip giant AMD has officially launched its latest artificial intelligence chip, the Instinct MI325X, marking a significant challenge to Nvidia’s dominance in the data center GPU market. The new chip is set to enter production by the end of 2024 and aims to capture a portion of the booming AI market, which AMD estimates will be worth $500 billion by 2028.

As AI technologies like OpenAI’s ChatGPT drive demand for advanced GPUs to power massive data centers, Nvidia has maintained a commanding lead, with more than 90% market share in AI data center chips. However, AMD, traditionally second in the data center GPU market, is now stepping up to directly compete with Nvidia’s high-performance chips, including the upcoming Blackwell series.

The MI325X will go head-to-head with Nvidia’s Blackwell GPUs, which are expected to begin shipping in volume in early 2025. At the product launch, AMD CEO Lisa Su highlighted the growing demand for AI hardware and said the Instinct MI325X offers up to 40% better inference performance on Meta’s Llama AI models than Nvidia’s H200 GPU.

One of AMD’s key selling points is the advanced memory technology on the MI325X, which allows it to serve certain AI models faster than Nvidia’s chips. AMD also aims to give AI developers more flexibility by enhancing its ROCm software ecosystem, an alternative to Nvidia’s CUDA programming platform, which has long dominated the AI development landscape.

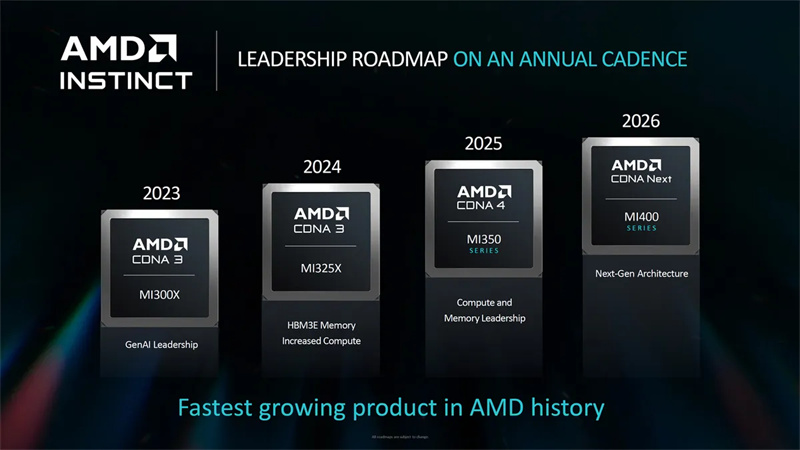

AMD is ramping up its AI chip release schedule to compete more effectively with Nvidia. After launching the MI325X, the company plans to release the MI350 in 2025, followed by the MI400 in 2026, as part of a yearly product cadence. The MI325X follows the MI300X, which started shipping in late 2023.

In addition to the MI325X, AMD also previewed its upcoming Instinct MI355X, which is set to begin production in the second half of 2025. The MI355X will be built on TSMC’s 3nm process and is expected to offer up to 9.2 petaflops of FP4 compute power, rivaling Nvidia’s Blackwell chips. With 288GB of HBM3E memory and 8 TB/s of bandwidth, the MI355X is designed to handle the most demanding AI workloads.
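To put the memory figures above in context, here is a rough back-of-envelope sketch, not an AMD benchmark. It assumes that single-stream text generation is limited by how fast the model weights can be read from memory, and it ignores the KV cache, activations, batching, and compute limits. The function names and the 70-billion and 405-billion parameter counts are illustrative assumptions; only the 288GB and 8 TB/s figures come from the preview described above.

```python
# Back-of-envelope estimate only: assumes decoding is memory-bandwidth bound
# and that the weights are read once per generated token.

HBM_CAPACITY_GB = 288      # MI355X memory capacity quoted in the article
HBM_BANDWIDTH_TB_S = 8     # MI355X memory bandwidth quoted in the article


def weights_size_gb(params_billion: float, bytes_per_param: float) -> float:
    """Size of the model weights in GB (1e9 parameters * bytes / 1e9)."""
    return params_billion * bytes_per_param


def fits_in_memory(params_billion: float, bytes_per_param: float,
                   capacity_gb: float = HBM_CAPACITY_GB) -> bool:
    """True if the weights alone fit in the given memory capacity."""
    return weights_size_gb(params_billion, bytes_per_param) <= capacity_gb


def max_tokens_per_second(params_billion: float, bytes_per_param: float,
                          bandwidth_tb_s: float = HBM_BANDWIDTH_TB_S) -> float:
    """Upper bound on single-stream tokens/s if every token reads all weights."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return bandwidth_tb_s * 1e12 / weight_bytes


if __name__ == "__main__":
    # Hypothetical models: (parameters in billions, bytes per parameter)
    for name, params, width in [("70B @ 8-bit", 70, 1.0),
                                ("405B @ 4-bit", 405, 0.5),
                                ("405B @ 16-bit", 405, 2.0)]:
        print(name,
              f"weights={weights_size_gb(params, width):.1f} GB",
              f"fits={fits_in_memory(params, width)}",
              f"bound={max_tokens_per_second(params, width):.1f} tok/s")
```

Under these assumptions, a hypothetical 70-billion-parameter model stored at one byte per parameter is capped at roughly 114 tokens per second per stream, and a 405-billion-parameter model fits on a single accelerator at 4-bit precision but not at 16-bit. Real-world throughput depends on software, batching, and the workload, so the numbers are best read as a ceiling rather than a prediction.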

Despite its advancements, AMD still faces challenges in breaking Nvidia’s stronghold on the market. One of the biggest obstacles is Nvidia’s CUDA ecosystem, which locks many AI developers into its platform. However, AMD hopes that continued improvements to its ROCm software will make it easier for developers to transition their models to AMD’s hardware.

Moreover, AMD’s total cost of ownership (TCO) pitch aims to attract businesses by offering either better performance at the same price or a lower price for the same performance. As more companies look to diversify their AI hardware suppliers, AMD hopes this pricing strategy will help it capture a significant share of the market.

The explosion in AI demand has prompted both AMD and Nvidia to aggressively expand their data center product lines. While AMD has seen a 20% rise in its stock in 2024, Nvidia has surged by over 175%, reflecting the immense value AI has brought to the semiconductor market.

As AMD continues to push its AI and data center GPU roadmap, industry observers are closely watching how its latest products, like the MI325X and MI355X, will fare against Nvidia’s Blackwell family. With AI expected to remain a key driver of growth in the semiconductor industry, the competition between these two tech giants is set to intensify.
