It seems like everyone wants to get their hands on Nvidia’s H100 chips these days.

Microsoft and Google, which are building out generative AI-driven search engines, are some of the biggest customers of Nvidia’s H100 chips. Server manufacturers claim to have waited more than six months to receive their latest orders. Venture capital investors are buying up H100 chips for the startups they invest in.

But tech companies are not the only ones trying to secure H100s: Saudi Arabia and the UAE have reportedly snapped up thousands of these $40,000 chips to build their own AI applications, according to the Financial Times, which cited unnamed sources.

This steep demand for one chip from one company has led to something of a buying frenzy. “Who’s getting how many H100s and when is top gossip of the valley,” as OpenAI’s Andrej Karpathy put it in a Twitter post.

Even Elon Musk, amid his obsession with fighting Mark Zuckerberg, found time to comment on the scarcity of Nvidia’s chips. (Musk did not specify whether he was referring to H100 chips, which debuted last year, or to Nvidia’s chips in general. But chips for AI are certainly all the rage at the moment.) Musk’s Tesla is spending $1 billion to build a new supercomputer named Dojo, which will process the data from its fleet of autonomous vehicles and train the software that drives them. The Dojo plan began, Musk said, only because Tesla didn’t have enough Nvidia GPUs (graphics processing units, as these chips are called). “Frankly...if they could deliver us enough GPUs, we might not need Dojo,” Musk told investors and analysts on a conference call in July. “They’ve got so many customers. They’ve been kind enough to, nonetheless, prioritize some of our GPU orders.”

If Tesla had received as many chips from Nvidia as it needed, those chips would have gone into specialized computers that train on the vast amount of video data that, Musk says, is needed to achieve a “generalized solution for autonomy.”

That data has to be processed somehow. Dojo is therefore optimized for video training rather than for generative AI systems, Musk said. It is designed to handle the volume of data that self-driving vehicles require, which matters for achieving autonomous driving that is safer than human driving.

Why do generative AI models depend on Nvidia’s H100 chips?

Large language models (LLMs) are trained on massive amounts of data to generate complex responses to questions. But integrating LLMs into real-world applications like search engines requires a lot of computing power.

In a study, researchers at the University of Washington and the University of Sydney broke down the high costs of running LLMs. Google processes over 99,000 search queries per second. If GPT-3 were embedded into every query, and assuming that each query generates 500 tokens (the word fragments that a language model reads and writes), Google would need roughly 2.7 billion A100 GPUs, an older Nvidia AI chip, to keep up. The cost of these GPUs would exceed $40 billion in capital expenditures alone, the researchers estimated.

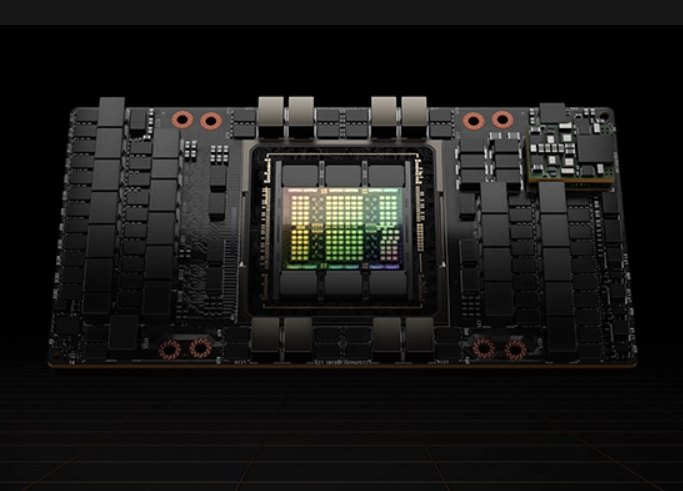
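To make the arithmetic behind that estimate concrete, here is a minimal back-of-envelope sketch in Python. The query rate and tokens per query come from the figures quoted above. The per-GPU throughput is a purely hypothetical placeholder, not a number from the study, so the resulting GPU count is illustrative only and is not meant to reproduce the researchers' estimate.

```python
import math

# Figures quoted in the article.
QUERIES_PER_SECOND = 99_000
TOKENS_PER_QUERY = 500


def token_demand(queries_per_second, tokens_per_query):
    """Tokens the fleet must generate every second."""
    return queries_per_second * tokens_per_query


def gpus_needed(tokens_per_second, tokens_per_gpu_per_second):
    """GPUs required to sustain the demand, rounded up to a whole chip."""
    return math.ceil(tokens_per_second / tokens_per_gpu_per_second)


demand = token_demand(QUERIES_PER_SECOND, TOKENS_PER_QUERY)

# ASSUMPTION: hypothetical sustained throughput of one GPU, in tokens
# per second. Swap in a measured value for a real estimate.
HYPOTHETICAL_TOKENS_PER_GPU_PER_SECOND = 10.0

print(f"Token demand: {demand:,} tokens per second")
print(
    "GPUs needed at the assumed throughput: "
    f"{gpus_needed(demand, HYPOTHETICAL_TOKENS_PER_GPU_PER_SECOND):,}"
)
```

The point of the sketch is the shape of the calculation: demand scales with queries and tokens per query, so a chip that produces more tokens per second shrinks the required fleet proportionally.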

What Google and other companies need is a more powerful chip that performs better at the same price or lower, said Willy Shih, a professor of management practice at Harvard Business School who previously worked at IBM and Silicon Graphics. Enter the Nvidia H100, named for computer scientist Grace Hopper. The H100 is tailored for generative AI and runs faster than previous models. The more powerful the chips, the faster you can process queries, Shih said.

The demand for high-performing AI chips has been a boon for Nvidia, which commands the market—in part due to luck—as competitors scramble to catch up.

Can Amazon and Google meet the AI chip demand?

As generative AI startups scale up and find themselves running short of H100s, competitors have an opening. Amazon and Google, which are building their own Nvidia-like chips, could rise to the occasion. Amazon’s chips are called Inferentia and Trainium; Google’s are Tensor Processing Units.

“It’s one thing to get access to infrastructure. But it’s another thing when, for example, you may want to scale the application, then you suddenly realize that you don’t have enough capacity that’s available,” said Arun Chandrasekaran, a Gartner analyst who focuses on the cloud and AI. “So I think it’s going to take some time for really these challenges to even out.”
